If you want to understand how your potential clients search for the products or services you offer, keyword research is critical.

The keywords you use on your site may not be the ones your potential clients use to look for your services.

Good keyword research starts with gathering data: keywords currently bringing traffic, keywords with impressions but no clicks, PPC data (if any), keywords used by competitors, and data from keyword tools.
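As a sketch of the "impressions but no clicks" step, assuming query data has been exported (for example from the Search Console performance report) into rows of query, clicks and impressions. The `QueryRow` shape, the `min_impressions` threshold and the sample queries are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    # Hypothetical shape of one exported row: the search query and its metrics.
    query: str
    clicks: int
    impressions: int


def impressions_without_clicks(rows, min_impressions=100):
    """Queries that show up in search results but never get clicked,
    largest missed opportunity (most impressions) first."""
    candidates = [r for r in rows if r.clicks == 0 and r.impressions >= min_impressions]
    candidates.sort(key=lambda r: r.impressions, reverse=True)
    return [r.query for r in candidates]


rows = [
    QueryRow("seo berlin", 40, 900),
    QueryRow("seo audit cost", 0, 450),
    QueryRow("technical seo checklist", 0, 1200),
    QueryRow("seo agency", 0, 30),  # below the threshold, ignored
]
print(impressions_without_clicks(rows))  # ['technical seo checklist', 'seo audit cost']
```

Queries on this list are candidates for better titles and descriptions, or for dedicated content.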

SEO Consulting

Search engine optimization (SEO) consulting is about helping all your content rank well, so that the investment you have put into content marketing pays off.

With greater visibility, you will attract more visitors month after month. In turn, these visitors generate customer inquiries, and thus more sales.

Get in touch

Owner @ SEO Berlino

SEO & Analytics Senior Consultant

E - [email protected]

Let's meet: set up a free 30-minute call

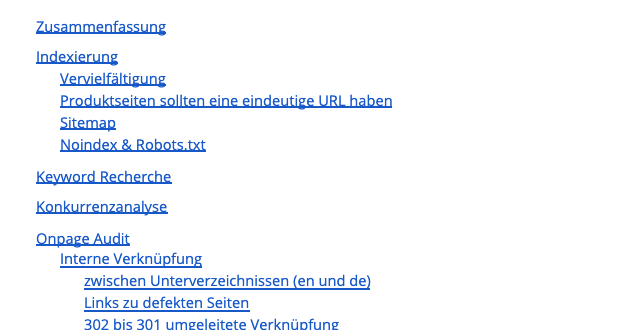

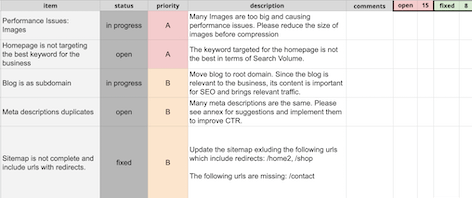

The SEO Check Audit follows the format below, although it can be adapted to the client's requirements and priorities: Executive Summary, Keyword Research, Competitor Analysis, On-page & Technical SEO, Backlink Profile Analysis. We also include a backlog (as an online Google Sheet) which takes all the items of the audit and classifies them by priority, with a short description, a status column that changes as the fixes are implemented, and a comment section for communication.

Each backlog entry contains an explanation of the issue, its priority, the proposed solution with its explanation and expected results, and a comments section for communication.
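The backlog described above can be modelled as a simple list of records. This is a minimal sketch, assuming a three-level priority scale and "open"/"done" status values; the field names follow the columns listed in the text, while the scale, status values and sample items are hypothetical:

```python
from dataclasses import dataclass, field

# Hypothetical priority scale: lower number = more urgent.
PRIORITY_ORDER = {"high": 0, "medium": 1, "low": 2}


@dataclass
class BacklogItem:
    issue: str
    priority: str            # "high", "medium" or "low"
    solution: str
    expected_result: str
    status: str = "open"     # updated as fixes are implemented
    comments: list = field(default_factory=list)


def prioritized(backlog):
    """Roadmap view: open items before finished ones, then by priority."""
    return sorted(
        backlog,
        key=lambda item: (item.status == "done", PRIORITY_ORDER[item.priority]),
    )


backlog = [
    BacklogItem("Missing meta descriptions", "medium", "Write unique descriptions", "Higher CTR"),
    BacklogItem("Redirect chains", "high", "Point links to final URLs", "Less crawl waste"),
    BacklogItem("Duplicate titles", "high", "Rewrite titles", "Clearer targeting", status="done"),
]
print([item.issue for item in prioritized(backlog)])
```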

SEO Audits Content

Keyword Research

Keyword Research Process

About Keyword Research

Which keywords have the most value for you?

Search volume is not the most important factor, but if there is no search volume for the terms you want to optimize your site for, you are already making your life difficult.

Equally, targeting highly competitive keywords with very high search volumes, or keywords unrelated to the products or services you offer, will cause problems. Set up a list of keywords (main keywords, broad terms, related terms, long tail), each type of keyword serving a different objective. Also include voice search terms and questions.

Voice search is causing a lot of disruption in keyword research, since people use different phrases depending on whether they type or speak. In general, voice search keywords are longer and take the form of a question.
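A keyword list can be split roughly along these lines with simple heuristics. This is a sketch only: the question-word list and the four-word long-tail threshold are assumptions for English queries, not rules from any tool:

```python
# Hypothetical heuristics; adjust the word list and threshold to your market and language.
QUESTION_WORDS = ("who", "what", "when", "where", "why", "how", "which", "can", "is", "does")
LONG_TAIL_MIN_WORDS = 4


def classify_keyword(keyword):
    words = keyword.lower().split()
    if words and words[0] in QUESTION_WORDS:
        return "question"    # likely voice search / question-style query
    if len(words) >= LONG_TAIL_MIN_WORDS:
        return "long tail"
    return "short"


def group_keywords(keywords):
    """Group a keyword list by type, keeping the original order within each group."""
    groups = {}
    for keyword in keywords:
        groups.setdefault(classify_keyword(keyword), []).append(keyword)
    return groups


print(group_keywords(["seo consulting", "how much does an seo audit cost",
                      "seo consulting berlin small business"]))
```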

Keyword Tools

Different types of SEO Consulting

One-time Consulting

One-time consultations are usually requested by companies which need to establish a strategy and a roadmap and have the internal resources and know-how to implement the improvements.

One-off consultations are also suited to clients who need specific support, for instance with indexation issues or page speed performance.

Continuous Consulting

Since SEO is a long-term process, it is generally preferable to keep a continuous eye on it. Continuous consulting can be tailored to your needs, with prioritized tasks and monthly reporting.

SEO Consulting Services

SEO strategy and concept development

Action Plan and Reporting

After the audit, we create an action plan for continuous SEO support, including regular monitoring and reporting. All measures can be documented and their results measured transparently. You will receive regular ranking and traffic evaluations from us so you can follow the progress of the campaign. To help turn visitors to your website into real customers of your company, we can also support you in analyzing and interpreting user data.

Keyword research and competitor analysis

Choosing the right keywords is critically important for search engine optimization. The SEO experts at SEO Berlino will work with you to decide which search terms should lead people to you on Google, in order to increase demand for your products or services in the long term. Both the search volume of the keywords and the competition for these terms must be considered. During the SEO consultation, we define with you the keywords that will serve as the basis for optimization.

A competitor analysis determines, for instance, how your website performs compared to the competition and which keywords bring competitors the most traffic. We can adapt the scope of the research to your needs.
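One common output of a competitor analysis is a "keyword gap": terms competitors rank for that you do not. A minimal sketch, assuming you have already exported each competitor's ranking keywords from a tool of your choice; the function name, input shapes and sample domains are hypothetical:

```python
def keyword_gap(own_keywords, competitor_keywords):
    """Keywords at least one competitor ranks for but you do not,
    with the number of competitors ranking for each."""
    own = {k.lower() for k in own_keywords}
    counts = {}
    for keywords in competitor_keywords.values():
        for keyword in {k.lower() for k in keywords}:
            if keyword not in own:
                counts[keyword] = counts.get(keyword, 0) + 1
    # Keywords shared by several competitors come first, then alphabetical.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


competitors = {
    "competitor-a.example": ["seo audit", "local seo", "seo training"],
    "competitor-b.example": ["local seo", "link building"],
}
print(keyword_gap(["seo audit", "seo berlin"], competitors))
```

Keywords that several competitors share are usually the first ones worth checking against your own keyword list.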

Onpage and Technical SEO

On-page and technical SEO primarily include measures that optimize the source code and content of the website. Content can be enriched with information and keywords that help improve the visibility of individual landing pages in search engines. We take care of optimizing the meta information for you, including page titles.

On a more technical level, we analyse the crawlability and indexation of your site. We also make sure to prevent keyword cannibalization, that is, pages of your own domain competing against each other.
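Cannibalization candidates can be spotted by looking for queries where several of your own URLs receive impressions. A sketch assuming an export with query, page and impressions columns (such as a Search Console report grouped by query and page); the tuple format and `min_impressions` cut-off are hypothetical:

```python
from collections import defaultdict


def cannibalization_candidates(rows, min_impressions=10):
    """rows: (query, page_url, impressions) tuples.
    Returns {query: [urls]} for queries where two or more of your own URLs
    receive at least min_impressions impressions."""
    pages_per_query = defaultdict(set)
    for query, page, impressions in rows:
        if impressions >= min_impressions:
            pages_per_query[query].add(page)
    return {q: sorted(p) for q, p in pages_per_query.items() if len(p) > 1}


rows = [
    ("seo audit", "/seo-audit", 500),
    ("seo audit", "/blog/what-is-an-seo-audit", 120),
    ("seo audit", "/contact", 3),        # too few impressions to matter
    ("local seo", "/local-seo", 300),
]
print(cannibalization_candidates(rows))
```

Not every hit is a problem: two pages can legitimately serve different intents for the same query, so each result needs a manual review.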

The content marketing services we offer are either the creation of effective and high-quality texts, or the revision of existing content with regard to internal linking, headings, images, text volume and quality.

Offpage SEO

In addition to on-page work, we look at your backlink profile compared to your competitors' and help you set objectives for boosting your domain authority and number of referring domains. We examine your link profile and highlight threats and opportunities.

Why you should use the SEO advice of SEO Berlino

- Many years of experience in SEO consulting with over 50 clients

- Permanent and dedicated contact person with tailor-made SEO solutions

- Transparent work and monthly SEO reports

- Agile-inspired Backlog for a prioritized roadmap

On-page SEO Consulting

Importance of on-page SEO

Why do people visit your site? Most likely because it contains information they’re looking for. Therefore you should write excellent content. Search engines like Google read your text, and which site ranks highest is largely based on the content of each website. That content should be about the right keywords, informative, and easy to read.

On-page SEO consists of all the elements of SEO you can control best. If you own a website, you control the technical issues and the quality of your content, so on-page issues should all be tackled. If you create an excellent website, it is far more likely to start ranking. Focusing on on-page SEO also increases the probability that your off-page SEO strategy will succeed: link building for a poor site is a very tough job, because nobody wants to link to articles that are badly written or uninteresting.

As an important first step, keyword research is the process of understanding how your target users search for your product or service.

It has to be done before starting the on-page work. Competitor analysis helps expand the research and ensures no important keyword is omitted. Although since Hummingbird, Google is more semantically driven in assessing a website's content, and focussed on

Title

<title>My Unique Title</title>

Page titles are often neglected, yet they are very important and simple to implement, so it pays to get them spot on. Each page should have a unique title that clearly states the page's main focus, using terms that users search for, i.e. terms from your keyword list. This also applies to the homepage, your most important page. Unless you are a major brand with huge brand awareness, avoid using just your brand name; use the most important keyword that best describes your business instead.
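Unique titles are easy to verify once pages are downloaded. A minimal sketch using Python's standard `html.parser`, assuming you already have the HTML of each page in a dict keyed by URL (the sample pages are hypothetical); pages without a title are grouped under an empty string:

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text inside the first <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = None

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self._in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_title(html):
    parser = TitleParser()
    parser.feed(html)
    return (parser.title or "").strip()


def duplicate_titles(pages):
    """pages: {url: html}. Returns {title: [urls]} for titles used more than once."""
    by_title = {}
    for url, html in pages.items():
        by_title.setdefault(extract_title(html), []).append(url)
    return {title: sorted(urls) for title, urls in by_title.items() if len(urls) > 1}


pages = {
    "/": "<html><head><title>SEO Consulting Berlin</title></head></html>",
    "/about": "<title>SEO Berlino</title>",
    "/contact": "<title> SEO Berlino </title>",
}
print(duplicate_titles(pages))
```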

Description

<meta name="description" content="my unique description" />

Meta descriptions are not required for your page to rank well. If your meta description is empty or missing, Google takes some text from the page to fill the gap. If you have one but Google does not use it, Google most likely did not find it relevant enough for the search query. Either way, meta descriptions matter for click-through rate (CTR): search terms included in your description may appear in bold, and a good description confirms to users that your result is the most relevant for their search and adds the difference that makes them click.

Headings

<h1>my unique h1: page main topic</h1>

<h2>Title part 1</h2>

<h3>Subtitle 1</h3>

<h3>Subtitle 2</h3>

<h2>Title part 2</h2>

<h2>Title part 3</h2>

<h3>Subtitle 1</h3>

<h3>Subtitle 2</h3>

<h3>Subtitle 3</h3>

You should have one h1 per page, containing the main keyword for the page. Then use h2, h3, etc. logically, according to the structure of the content.
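Both rules can be checked automatically. This sketch assumes "logically" means no skipped levels (for example an h4 directly after an h2), a common convention rather than a Google requirement, and uses a simple regular expression instead of a full HTML parser:

```python
import re

# Matches <h1> ... <h6> opening tags (with or without attributes), not <head> or <hr>.
HEADING_RE = re.compile(r"<h([1-6])\b", re.IGNORECASE)


def heading_issues(html):
    """Checks for exactly one h1 and for skipped heading levels."""
    levels = [int(n) for n in HEADING_RE.findall(html)]
    issues = []
    h1_count = levels.count(1)
    if h1_count != 1:
        issues.append(f"expected 1 h1, found {h1_count}")
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            issues.append(f"h{current} follows h{previous}")
    return issues


print(heading_issues("<h1>SEO</h1><h2>Part 1</h2><h3>Sub</h3><h2>Part 2</h2>"))  # []
print(heading_issues("<h2>Part 1</h2><h4>Sub</h4>"))
```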

Social Media: OpenGraph & Twitter Card

<meta property="og:title" content="mytitle" />

<meta property="og:url" content="myurl" />

<meta property="og:description" content="mydescription" />

<meta property="og:locale" content="countrycode" />

<meta property="og:site_name" content="name" />

<meta name="twitter:card" content="summary" />

<meta name="twitter:site" content="@name" />

<meta name="twitter:title" content="mytitle" />

<meta name="twitter:description" content="mydescription" />

<meta name="twitter:image" content="/logo.jpg" />

Viewport

<meta name="viewport" content="width=device-width, initial-scale=1" />

Crawl your website

Many tools, such as Screaming Frog, can easily identify missing or empty meta tags. They also help you identify and fix duplicate or unclear meta tags, and those that are too long or too short.
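Once a crawler has exported titles and descriptions, the same checks can be reproduced in a few lines. The character bounds below are assumptions: guides and tools use different values, and Google truncates snippets by pixel width rather than by character count. The input format is hypothetical:

```python
# Hypothetical length bounds in characters.
BOUNDS = {"title": (30, 60), "description": (70, 160)}


def meta_length_report(pages):
    """pages: {url: {"title": str | None, "description": str | None}}.
    Flags missing/empty, too short, too long and duplicated values."""
    report = []
    seen = {}
    for url, metas in pages.items():
        for name, (low, high) in BOUNDS.items():
            value = (metas.get(name) or "").strip()
            if not value:
                report.append((url, name, "missing or empty"))
                continue
            if len(value) < low:
                report.append((url, name, "too short"))
            elif len(value) > high:
                report.append((url, name, "too long"))
            key = (name, value)
            if key in seen:
                report.append((url, name, f"duplicate of {seen[key]}"))
            else:
                seen[key] = url
    return report
```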

Alt Attributes

Description

The alt attribute helps search engines understand the image they are crawling.

Alt stands for 'alternate'. It is often called an 'alt tag', but it is in fact an 'attribute'.

Describing your images with alt attributes is good SEO practice. Other image-related optimization items are file size, load time and file name.

For most industries, Image Search is still an important SEO sub-channel. Given how Image Search now works, many image visits are only virtual and therefore invisible in analytics reports. Even so, Image Search remains a fantastic opportunity to improve brand awareness.

<img src="/image.jpg" alt="yourimagealthere" title="furtherinfohere" />

Make sure images are well optimized, from image hosting to alt attributes. If your website makes active use of images, use Image Search to improve brand awareness.

The alt attribute gives Googlebot important information about what the picture shows. If the image path is incorrect, the browser displays the alt (alternative) text instead.
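Missing alt attributes are easy to find programmatically. A sketch using the standard `html.parser` module; note that an empty alt is the correct choice for purely decorative images, so treat the results as candidates to review rather than errors. The sample HTML is hypothetical:

```python
from html.parser import HTMLParser


class ImageAltAudit(HTMLParser):
    """Records the src of every <img> whose alt attribute is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src", "(no src)"))


def images_without_alt(html):
    audit = ImageAltAudit()
    audit.feed(html)
    return audit.missing


html = '<img src="/team.jpg" alt="SEO Berlino team" /><img src="/b.jpg"><img src="/c.jpg" alt="">'
print(images_without_alt(html))  # ['/b.jpg', '/c.jpg']
```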

Image title

The title appears as a tooltip when the mouse hovers over the image. Use it to give more information about the picture. Although it is not as important for SEO as the alt attribute, it should not be neglected. Avoid copying and pasting the text of the alt attribute.

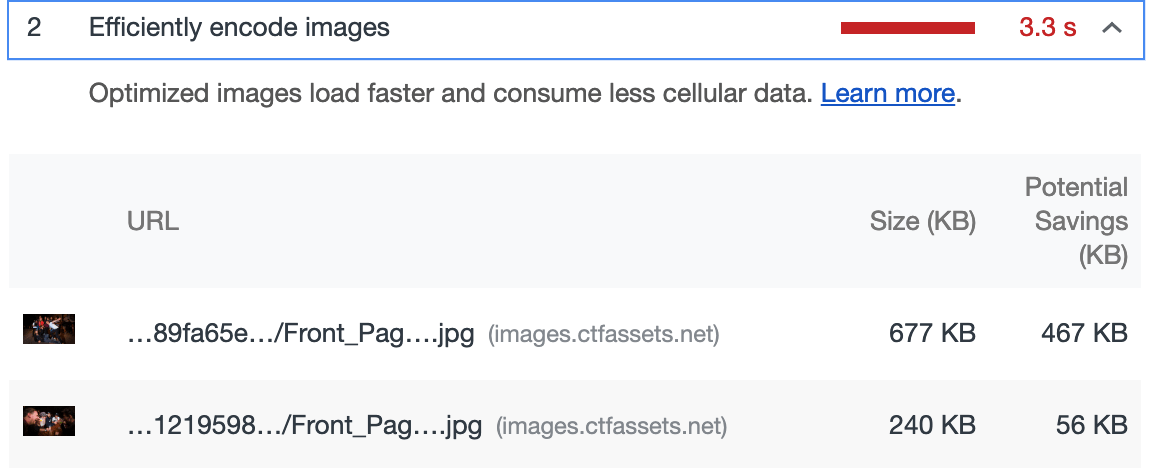

Image size and format

Images that are too large slow down your page speed, especially for mobile traffic.

Internal Linking

Understand how the Google bot crawls your website

Internal linking is core to on-page SEO, especially for big websites, for reasons such as redistributing link juice and prioritizing key pages or site sections. Google crawls websites by following links, internal and external, using a bot called Googlebot. This bot arrives at a page of a website, such as the homepage, renders it and follows the links it finds. By following links, Google can work out the relationship between the various pages, posts and other content. This way Google finds out which pages on your site cover similar subject matters.

It all begins with the homepage

Review and audit your current internal linking settings, including navigation, sub-navigations, breadcrumb and tags, taking into account business prioritization. In addition to understanding the relationship between content, Google divides link value between all links on a web page. Often, the homepage of a website has the greatest link value because it has the most backlinks. That link value will be shared between all the links found on that homepage. The link value passed to the following page will be divided between the links on that page, and so on. Therefore, your newest blog posts will get more link value if you link to them from the homepage, instead of only on the category page. And Google will find new posts quicker if they’re linked to from the homepage.
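The way link value is divided between the links on a page is the idea behind the original PageRank formula. Google's current systems use many more signals and their details are not public, so the following is only the classic textbook power iteration, with the commonly cited damping factor of 0.85 and a hypothetical four-page site. It compares a new post linked only from its category page with the same post also linked from the homepage:

```python
def pagerank(links, damping=0.85, iterations=50):
    """Classic PageRank power iteration.
    links: {page: [pages it links to]}; every linked page must also be a key."""
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, targets in links.items():
            if targets:
                # A page's value is split evenly between all its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Pages without links spread their value across all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


site_a = {"home": ["category", "about"], "category": ["post", "home"],
          "about": ["home"], "post": ["home"]}
site_b = dict(site_a, home=["category", "about", "post"])  # post also linked from home
print(round(pagerank(site_a)["post"], 2), round(pagerank(site_b)["post"], 2))  # roughly 0.13 and 0.23
```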

The ideal structure: a pyramid

The most important content should be on top of the pyramid, that being the Homepage. There should be lots of links to the most essential content from topically-related pages in the pyramid. However, you should also link from those top pages to subpages about related topics. Linking internally to related content shows Google what pages hold information about similar topics.
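A practical way to check the pyramid is to measure click depth: how many clicks each page is from the homepage. A sketch using breadth-first search over a hypothetical internal link map; pages that never appear in the result are orphans, unreachable through internal links:

```python
from collections import deque


def click_depth(links, start="/"):
    """Breadth-first search from the homepage: the minimum number of clicks
    needed to reach each page through internal links."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


links = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo-audit"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/services/seo-audit"],
    "/old-page": ["/"],  # links out, but nothing links to it
}
print(click_depth(links))
```

Important pages that end up many clicks deep are good candidates for links from the top navigation or the homepage.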

Top Navigation

Linking the most important posts or pages from the top navigation gives them a lot of link value and makes them stronger in Google’s eyes.

Breadcrumbs

Breadcrumbs have two positive impacts: for users, they aid navigation and show where they are on the site; for SEO, they boost internal linking and help map the site structure.

Indexation and Crawlability

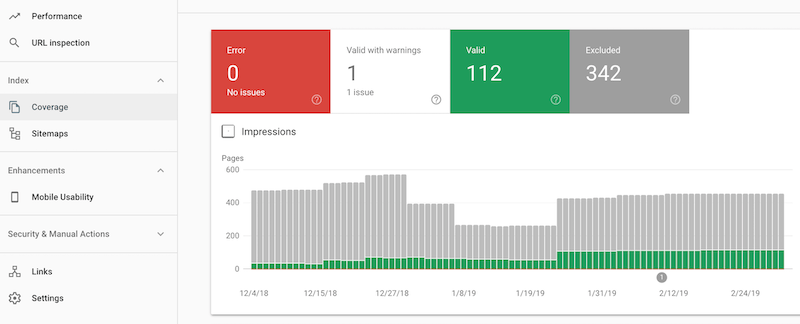

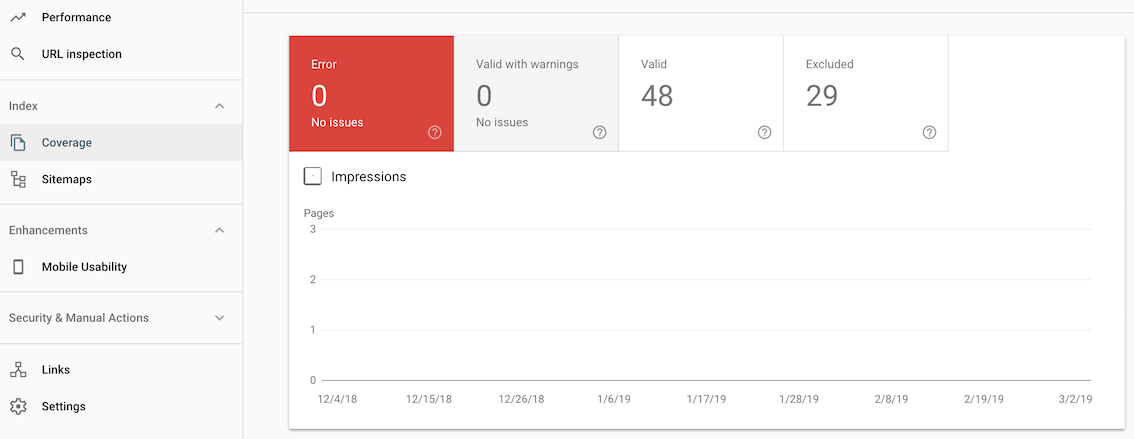

Indexation

Investigate your indexation

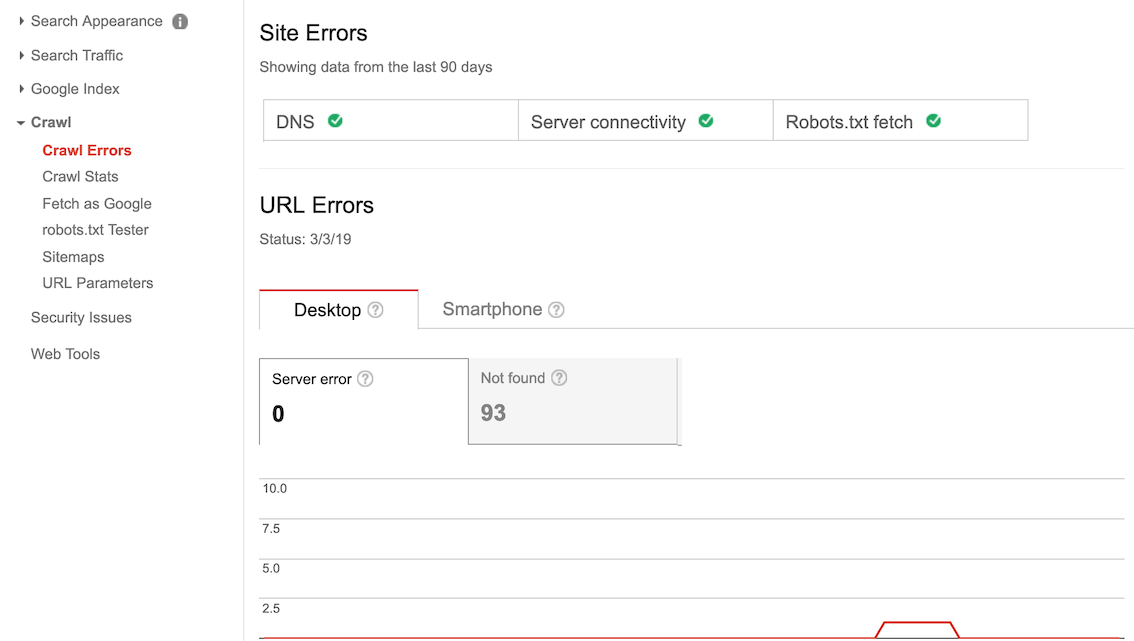

Make sure all the important pages are indexed and that those you do not want indexed are not. You can check the number of indexed pages in Google Search Console, and use the site: search operator (e.g. site:yoursite.com) to check which pages are indexed.

Check for duplicate or irrelevant indexed pages and plan how to have those pages de-indexed. The best way is to return a 410 (Gone) status code for those unwanted pages until they are de-indexed.
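On most sites this is configured in the web server or CMS, but the logic can be illustrated with Python's standard `http.server`. A minimal sketch; the paths in `GONE_PATHS` are hypothetical, and query strings are ignored when matching:

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlsplit

# Hypothetical paths of duplicate or irrelevant pages that should leave the index.
GONE_PATHS = {"/old-promo", "/tag/misc"}


def status_for(raw_path):
    """410 Gone for unwanted pages (query strings ignored), 200 otherwise."""
    return 410 if urlsplit(raw_path).path in GONE_PATHS else 200


class GoneHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status = status_for(self.path)
        self.send_response(status)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.end_headers()
        if status == 200:
            self.wfile.write(b"<p>Page content</p>")


def run(port=8000):
    # Serves until interrupted; not called automatically.
    HTTPServer(("127.0.0.1", port), GoneHandler).serve_forever()
```

Calling `run()` starts a local server on which `/old-promo` answers with 410 while every other path answers with 200.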

Redirects

Check your redirects and make sure they use the correct status codes (for example 301 for a permanent move). Keep the number of redirects low: if a large majority of the pages Google crawls are redirects, or worse, chains of redirects, your site will be negatively impacted.
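Redirect chains and loops can be detected from a list of redirect rules before Google finds them. A sketch assuming the rules are available as a source-to-target mapping (for example exported from a crawler or the server configuration); the `max_hops` limit and sample paths are hypothetical:

```python
def resolve_redirects(redirects, url, max_hops=10):
    """Follow a {source: target} redirect map and return the list of hops.
    Raises ValueError on a redirect loop or an overly long chain."""
    chain = [url]
    while chain[-1] in redirects:
        nxt = redirects[chain[-1]]
        if nxt in chain:
            raise ValueError("redirect loop: " + " -> ".join(chain + [nxt]))
        chain.append(nxt)
        if len(chain) - 1 > max_hops:
            raise ValueError("too many redirects from " + url)
    return chain


def redirect_chains(redirects):
    """Sources that need more than one hop to reach their final URL."""
    result = {}
    for source in redirects:
        chain = resolve_redirects(redirects, source)
        if len(chain) > 2:
            result[source] = chain
    return result


rules = {"/a": "/b", "/b": "/c", "/d": "/c"}
print(redirect_chains(rules))  # {'/a': ['/a', '/b', '/c']}
```

The fix for each chain found is to point the first URL, and any internal links to it, straight at the final destination.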

Check metas

While you are auditing your indexation, check the meta tags. Are any descriptions or titles missing? Are they unique, and do they target specific keywords that fit your overall strategy? Changing an existing URL structure is not recommended, but make sure your URLs are readable, with as few special characters and numbers as possible. Preferably, your URLs should be descriptive and as unique as possible.
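For new pages, readable URLs can be generated from page titles. A sketch using only the standard library; it simply strips accents, so a German "ä" becomes "a" rather than the "ae" some sites prefer, and it keeps digits:

```python
import re
import unicodedata


def slugify(text):
    """Turn a page title into a readable URL slug: lowercase ASCII words
    separated by hyphens, without special characters."""
    # Decompose accented characters (é -> e + accent) and drop the non-ASCII marks.
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    words = re.findall(r"[a-z0-9]+", ascii_text.lower())
    return "-".join(words)


print(slugify("SEO Consulting: Café & Bäckerei!"))  # seo-consulting-cafe-backerei
```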

Search Console

Crawling is directly related to indexing. To optimize indexing, you can guide Google on how to best crawl your site.

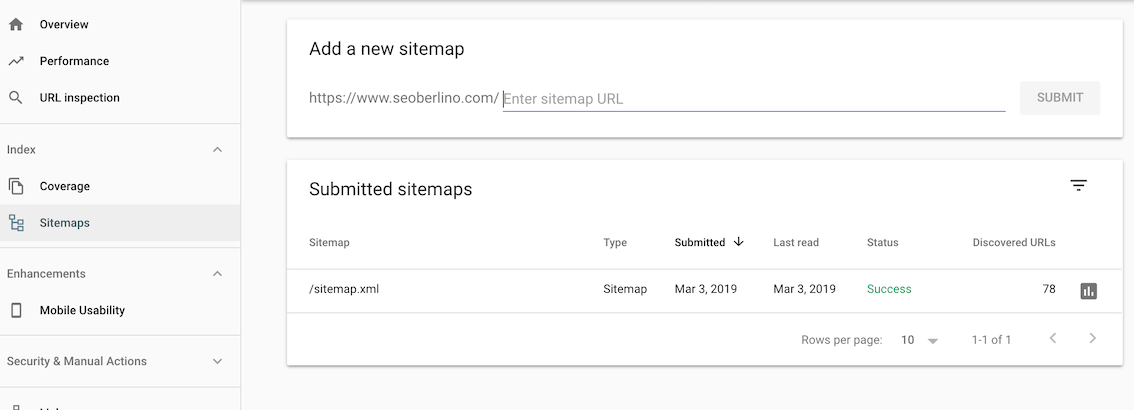

Sitemap(s)

An XML sitemap guides Google on how to crawl your site. Although Google says there is no guarantee Googlebot will follow it, a sitemap is still highly recommended and beneficial in most cases. It contains useful information about each page on your site, such as when the page was last modified, what priority it has on your site, and how frequently it is updated.
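A sitemap can be generated with Python's standard `xml.etree.ElementTree`, using the sitemaps.org namespace. A minimal sketch with a hypothetical page list; note that Google's documentation states it ignores `priority` and `changefreq` and relies mainly on an accurate `lastmod`:

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: list of dicts with "loc" and optional "lastmod" (YYYY-MM-DD),
    "changefreq" and "priority" keys. Returns the sitemap as an XML string."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        for key in ("loc", "lastmod", "changefreq", "priority"):
            if key in page:
                ET.SubElement(url, key).text = str(page[key])
    body = ET.tostring(urlset, encoding="unicode")  # escapes & and < in URLs
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    {"loc": "https://www.example.com/", "lastmod": "2021-03-01"},
    {"loc": "https://www.example.com/services", "lastmod": "2021-02-15"},
]))
```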

Robots

The robots.txt file tells crawlers, per user agent, which parts of the site they may access. Making sure you are not excluding the relevant search engine bots is therefore paramount.
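Before publishing a robots.txt, you can test which URLs it blocks with Python's standard `urllib.robotparser`. The file content and URLs below are hypothetical, and this parser uses simple prefix matching, so it does not implement every extension Google supports, such as `*` wildcards inside paths:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search

User-agent: BadBot
Disallow: /
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given user agent is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


urls = [
    "https://www.example.com/",
    "https://www.example.com/admin/login",
    "https://www.example.com/search?q=seo",
    "https://www.example.com/services",
]
print(blocked_urls(ROBOTS_TXT, urls))
```

An important page appearing in this list is exactly the kind of mistake the check is meant to catch.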

Metas

You can also guide Googlebot on a per-page basis with robots meta tags in the code, the most common directives being follow/nofollow and index/noindex.
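When auditing, it helps to read these directives back from each page's HTML. A sketch using the standard `html.parser` that looks at `<meta name="robots">` and the Google-specific `<meta name="googlebot">`; without such a tag, pages default to index and follow, and `none` is equivalent to `noindex, nofollow`:

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(",") if d.strip())


def robots_status(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    d = parser.directives
    return {
        "indexable": "noindex" not in d and "none" not in d,
        "follow_links": "nofollow" not in d and "none" not in d,
    }


print(robots_status('<meta name="robots" content="noindex, follow" />'))
```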

Search Console

You can submit URLs directly to Google for indexing in Google Search Console. This is particularly useful if you have crawling issues and want some pages crawled and indexed as a priority.

Google Crawl Credit

An important factor linking crawling and indexation is that, for sites with many pages (indexed or not), there is a limit to how much of your site Googlebot will crawl on each visit, often called the crawl budget. It is therefore important to keep an eye on which pages are indexed and to understand why they need to be indexed (not all pages do, in particular in the case of duplicate content).

Page Speed improvements

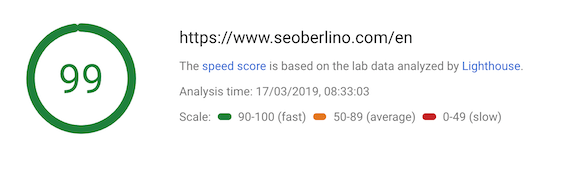

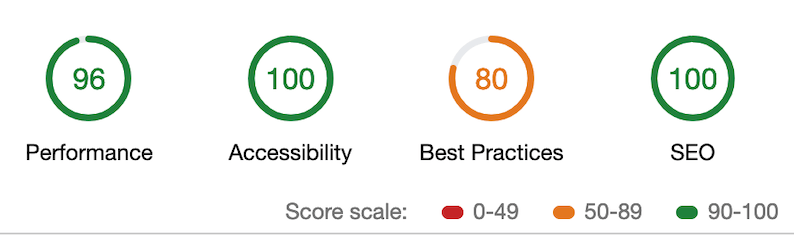

PageSpeed Insights

Description

Google PageSpeed is a family of tools by Google, designed to help webmasters improve their website's performance. PageSpeed Insights is an online tool which helps in identifying performance best practices on any given website, provides suggestions on a webpage’s optimizations, and suggests overall ideas of how to make a website faster.

Mobile / Desktop Score

For each URL requested, it grades page performance on a scale from 0 to 100.
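Lighthouse, which powers PageSpeed Insights, colour-codes the score in three bands: 0 to 49 (poor, red), 50 to 89 (needs improvement, orange) and 90 to 100 (good, green). A small sketch of that mapping, assuming the score comes from the PageSpeed Insights API, which reports it as a fraction between 0 and 1 (in the v5 response, under `lighthouseResult.categories.performance.score`):

```python
def to_percent(fraction):
    """Convert the API's 0-1 performance score to the 0-100 scale shown in the UI."""
    return round(fraction * 100)


def performance_rating(score):
    """Map a 0-100 performance score to the Lighthouse colour bands."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= 90:
        return "good"               # green
    if score >= 50:
        return "needs improvement"  # orange
    return "poor"                   # red


print(performance_rating(to_percent(0.72)))  # needs improvement
```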

Lab Data

Lab data is performance data collected within a controlled environment with predefined device and network settings. This offers reproducible results and debugging capabilities to help identify, isolate, and fix performance issues.

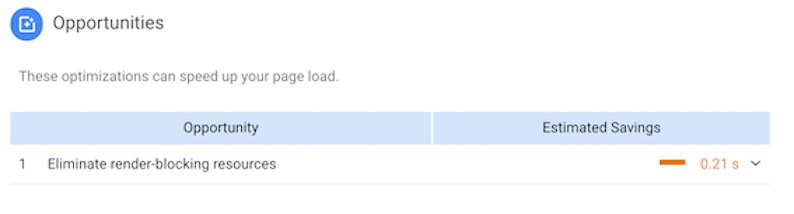

Opportunities

Opportunities provide suggestions on how to improve the page’s performance metrics. Each suggestion in this section estimates how much faster the page will load if the improvement is implemented.

Diagnostics

Diagnostics provide additional information about how a page adheres to best practices for web development.

Ways to speed up your site

Use fast hosting and a fast DNS (Domain Name System) provider.

Minimise HTTP requests: keep the use of scripts and plugins to a minimum, and use one CSS stylesheet (the code that tells a browser how to display your website) instead of multiple stylesheets or inline CSS.

Ensure your image files are as small as possible (without being too pixelated)

Compress your web pages (for example with gzip). Minify your site’s code: get rid of any unnecessary spaces, line breaks or indentation in your HTML, CSS and JavaScript (see Google’s Minify Resources page for help with this).
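Compression itself is normally switched on in the web server, which then serves pages with a `Content-Encoding: gzip` header. To get a feel for the savings, this sketch uses Python's standard `gzip` module to compare a page's raw and compressed size; the sample markup is hypothetical and deliberately repetitive, so real pages will usually compress less:

```python
import gzip


def compression_report(html):
    """Compare the raw and gzip-compressed size of a page, in bytes."""
    raw = html.encode("utf-8")
    compressed = gzip.compress(raw)
    return {
        "raw_bytes": len(raw),
        "gzip_bytes": len(compressed),
        "saving_percent": round(100 * (1 - len(compressed) / len(raw)), 1),
    }


page = "<div class='card'>SEO</div>\n" * 200
print(compression_report(page))
```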

Negative SEO Impact for slow sites

If your pages are slow to load, Google will notice and may classify your website as offering a poor user experience, which hurts its organic visibility. If you haven't done so yet, I strongly advise you to use Google PageSpeed Insights.

Relevant Tools and Resources

Please note that Lighthouse belongs to Google and its advice is (a bit too) specific to Google Chrome; for instance, it will advise you to use new image formats which may not be supported by other browsers such as Firefox or Safari.

Lighthouse

Description

Lighthouse is a great tool to check performance. Page load time is key in SEO and can have a significant impact on rankings.

- Chrome > New Incognito Window

- Google Chrome DevTools > Lighthouse (formerly Audits)

NB: Disqus & PageSpeed Test

Backlink Analysis • Link Building Strategy

Backlinks are still key in 2021

As has been the case for over 20 years, backlinks are key to SEO success and still define the reputation and popularity of your brand on the web. However, backlinks no longer work the way they did 20 years ago, far from it. Today it is much more about the quality and relevance of the links. It is crucial to understand where you stand, then plan, execute and monitor your backlink activities.

Quality over quantity

Getting many backlinks from any website, paying for links from unrelated websites, or getting links from websites operating in a different country or language are some examples of wasted effort. Most importantly, if the linking website has a poor reputation itself, the link's value will be minimal; worse, if the site is considered spammy, the link might even lower your website's reputation.

Getting a link from a reputable website relevant to your industry is a great achievement. However, keep in mind that the page the link actually appears on is what really counts. If that page has little exposure, few internal links and/or little traffic, the link's impact will not be as positive as if it were on the homepage, for example. Conversely, if the link appears on every page of the site, it might be seen as spammy backlinking, so avoid that extreme as well.

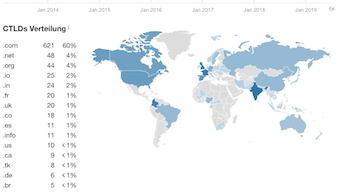

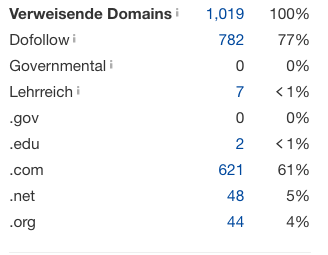

Competitors

It is very important and useful to look at your successful competitors and analyse:

- how they get their (high-quality) links

- the domain authority and quality of their backlink profile

- their follow/nofollow ratio

- their anchor texts

- their share of high-quality links

- the number and quality of their referring domains
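Several of these metrics can be computed from any backlink export. A sketch assuming each backlink is a `(source_url, rel)` tuple, where `rel` holds the link's rel attribute value (for example "nofollow" or "ugc nofollow"); real exports differ from tool to tool, so this input format is hypothetical:

```python
from collections import Counter
from urllib.parse import urlsplit


def backlink_summary(backlinks):
    """Counts links, referring domains and the share of nofollow links."""
    domains = Counter()
    nofollow = 0
    for source_url, rel in backlinks:
        host = urlsplit(source_url).hostname or ""
        host = host[4:] if host.startswith("www.") else host  # www.x and x count as one domain
        domains[host] += 1
        if "nofollow" in (rel or "").lower().split():
            nofollow += 1
    total = len(backlinks)
    return {
        "links": total,
        "referring_domains": len(domains),
        "nofollow_share": nofollow / total if total else 0.0,
        "top_domains": domains.most_common(3),
    }


links = [
    ("https://www.blog-a.example/post", "nofollow"),
    ("https://blog-a.example/other", ""),
    ("https://news.example/article", "ugc nofollow"),
    ("https://shop.example/", None),
]
print(backlink_summary(links))
```

Running the same summary for your site and for each competitor makes the comparison direct.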

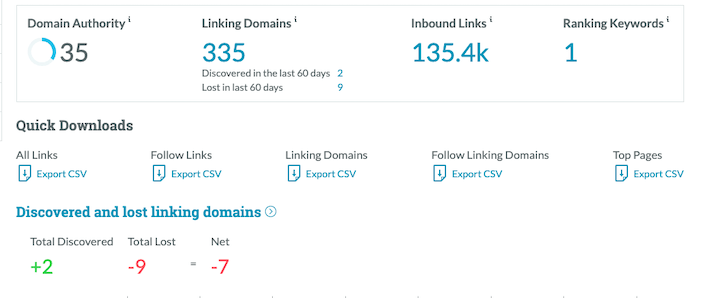

Backlink Analysis - Link Explorer

Link Explorer is a tool from Moz, previously known as Open Site Explorer. It is one of the main tools used in SEO to perform a backlink audit. Results vary from tool to tool, although the majority of links are found by each of the main tools (Ahrefs, Majestic, SEMrush, to name a few).

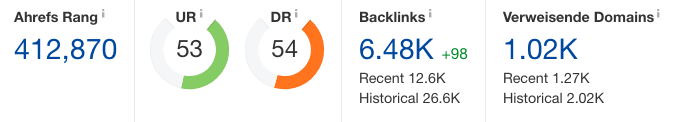

Backlink Analysis - Ahrefs

Anchor text needs to be as natural as possible, avoiding 'click here' or other misleading text. Very often the brand name is the most used anchor text. Make sure the ratio of nofollow links is not too high. A nofollow link means that the linking website does not want to vouch for the link as a sign of trust, and therefore does not pass link juice (authority). It is perfectly acceptable to have a certain percentage of nofollow links, but if this ratio gets too high, it is a sign that your website is not trusted.
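An anchor text profile can be summarised by sorting anchors into rough categories. A sketch with hypothetical categories: the list of generic phrases and the brand spellings are assumptions you would adapt to your own brand and language:

```python
from collections import Counter

# Hypothetical list of generic anchors.
GENERIC_ANCHORS = {"click here", "here", "read more", "this website", "website"}


def anchor_distribution(anchors, brand_terms):
    """Share of each anchor text category, rounded to two decimals.
    brand_terms: lowercase spellings of your brand name."""
    categories = Counter()
    for anchor in anchors:
        text = anchor.strip().lower()
        if not text:
            categories["empty / image"] += 1
        elif any(term in text for term in brand_terms):
            categories["brand"] += 1
        elif text in GENERIC_ANCHORS:
            categories["generic"] += 1
        elif text.startswith(("http://", "https://", "www.")):
            categories["naked url"] += 1
        else:
            categories["other / keyword"] += 1
    total = sum(categories.values())
    return {name: round(count / total, 2) for name, count in categories.items()}


anchors = ["SEO Berlino", "seo berlino consulting", "click here",
           "https://www.example.com", "technical seo audit", ""]
print(anchor_distribution(anchors, ["seo berlino"]))
```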

By nature, .gov and .edu sites carry a lot more trust and authority than .com sites, for instance. If you have the opportunity to get a link from such a site and it makes sense for your business, bringing in quality and relevant traffic at the same time, the link will very likely be beneficial.

Offpage & Backlinks

Off-page SEO refers to techniques that can be used to improve the position of a website in the search engine results pages (SERPs). It has to do with promotion methods aimed at getting higher rankings in the SERPs. Unlike on-page SEO, off-page SEO refers to activities performed outside the boundaries of the website. The most important are link building, social media marketing and social bookmarking.

Many think the more links, the better but it doesn't actually work that way. Too many low quality and/or spammy links and your website will lose Google credibility and therefore visibility.

For a few years now, you as a website owner have been responsible for the backlinks pointing to your site, so you need to monitor them constantly, identify bad links and clean up your backlink profile: first using the Disavow Tool, then by proactively trying to have the links removed.

Why is Offpage so important

Off page SEO gives search engines an indication on how other websites and users perceive the particular website. A website that is high quality and useful is more likely to have references (links) from other websites; more mentions on social media and it is more likely to be bookmarked and shared.

What is Link Building

Link building is the most popular and effective off-page SEO method. By building external links to your website, you are trying to gather votes of confidence from other relevant websites. Old methods that no longer work include blog directories, forum signatures, comment links and link exchanges. What matters is the quality of the links rather than their number. To get quality links, the best approach is to understand what content you can produce that adds value for potential customers and influencers, and then bring it to them.

Backlinks to avoid & Disavow Tool

Getting a backlink that appears on every page of a site is rarely a good idea, especially if it is not set as nofollow. First, the position of the link on the page is a key factor, so if your link is in the footer, its positioning is far from optimal. Second, your numerous backlinks will in fact count as only one, and its value will be minimal, if not negative. Other types of links to avoid: links from unrelated websites, from sites in different languages and/or countries, and from websites flagged as spammy.

Once you have identified those toxic links, the best first step is to use the Disavow Tool to tell Google you are aware of them and want them to be ignored. Please note that you need access to the website's Search Console property in order to use the tool. Google also advises you to actively get in touch with the respective webmasters and ask them to remove the links.
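The Disavow Tool takes a plain text file with one entry per line: either a full URL or `domain:` followed by a domain name, with lines starting with `#` treated as comments. A sketch that builds such a file from a list of toxic links; the input lists are hypothetical, and URLs whose host exactly matches a disavowed domain are skipped as redundant:

```python
from datetime import date
from urllib.parse import urlsplit


def build_disavow_file(toxic_urls, whole_domains=()):
    """Build the text of a disavow file: one 'domain:' line per domain to
    disavow entirely, then individual URLs from other domains."""
    domains = sorted({d.lower() for d in whole_domains})
    lines = [f"# Disavow file generated on {date.today().isoformat()}"]
    lines += [f"domain:{d}" for d in domains]
    for url in sorted(set(toxic_urls)):
        host = (urlsplit(url).hostname or "").lower()
        # Skip URLs already covered by a domain-wide entry.
        if host not in domains:
            lines.append(url)
    return "\n".join(lines) + "\n"


print(build_disavow_file(
    ["https://spam.example/page1", "https://other.example/bad-link"],
    whole_domains=["Spam.example"],
))
```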

Social Media & Social Bookmarking

Social Media is part of offpage SEO and a form of backlinking which could bring you traffic and recognition. Although most of the links from social media are nofollow they still have value. Social Media mentions are gaining ground as ranking factors and proper configuration of social media profiles can also boost SEO.

Social bookmarking is not as popular as it used to be in the past but it is still a good way to get traffic to your website. Depending on your niche you can find web sites like reddit.com, stumbleupon.com, scoop.it and delicious.com (to name a few) to promote your content.

SEO for Site Relaunch

Local SEO

Google MyBusiness

Location: an important factor in search results

Especially for local businesses, it is paramount to align your overall SEO strategy with local SEO, keeping in mind that the most important factor in personalised search results is location. Being well optimized for local SEO, especially in densely populated areas, helps you take advantage of this and gain privileged visibility among people located near you.

MyBusiness

To be present on Google Maps and in the SERPs for local searches, set up a Google My Business account.

Onpage Mentions

If you have a local business, like a shop, or have people visiting your office frequently, optimizing your website is also about making sure people can find you in real life. But even if you are not actively getting visitors to your building and are targeting an audience located in the same geographical area as you, you need to optimize for that area. The ground rule these days is that it is by far easiest to optimize if you have a proper address in a region or city. If you want to optimize for a service area you are not physically located in, for instance, your main tool for optimization is content: you should simply write a lot about that area.

Offpage mentions count

Local SEO isn’t just about search engines. Yes, there is a lot you can do online to optimize your website for a local audience. But if you are running a local business, things like word-of-mouth and a print brochure also contribute to local SEO. One way or another, this will be visible to Google as well.

Relevant Tools and Resources

When operating internationally, there are various options (same root domain, different top-level domains, subdomains) and questions to settle, such as how to link between them and how to simplify the process without negatively affecting your SEO.

An audit and careful planning will help you set up an effective SEO strategy suited to your requirements and resources. Make sure the language meta tags and the settings in Search Console are set up, and evaluate any possible SEO damage if you use client-side rendering with a mixed URL structure.

Same domain, multiple languages

If you use the same root domain for internationalisation, you concentrate your backlink efforts on one main domain. However, you have to deal with the added complexity: for instance, hreflang needs to be implemented, duplicate content avoided, and canonicals used when necessary.
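Following Google's guidance for hreflang, every language version of a page should list all versions, including itself, and can add an `x-default` fallback. A sketch that generates these `<link>` tags; the URLs and language codes are hypothetical, and the URLs must be absolute:

```python
from html import escape


def hreflang_links(alternates, default_lang=None):
    """alternates: {hreflang code: absolute URL} for all language versions of one page.
    Returns the <link> tags to place in the <head> of every one of those versions."""
    tags = [
        f'<link rel="alternate" hreflang="{code}" href="{escape(url)}" />'
        for code, url in sorted(alternates.items())
    ]
    if default_lang is not None:
        # x-default: the version shown when no language matches the user.
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(alternates[default_lang])}" />'
        )
    return tags


for tag in hreflang_links({"en": "https://www.example.com/en/",
                           "de-de": "https://www.example.com/de/"}, default_lang="en"):
    print(tag)
```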

One domain per language

If you use different top-level domains for internationalisation, there are pros and cons compared to a single root domain: it eases complexity and builds a local identity, with the possibility of optimizing server location. However, you will need to link the domains from your master domain and manage a separate backlink strategy for each domain.

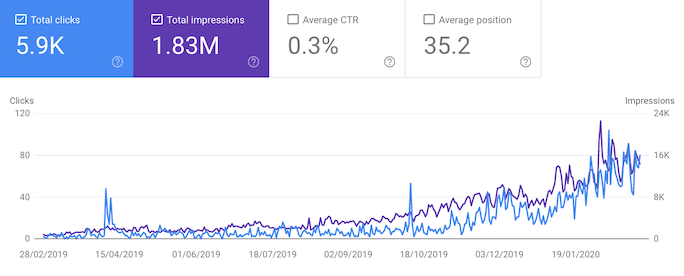

Success Stories

For this client, a strong content growth strategy multiplied traffic by 10 in one year, excluding brand keywords.

For this client, an audit showed that new keyword targeting was needed, along with better exposure of important pages with more traffic potential and improved technical settings and performance. These changes enabled the client to double its organic entries. It is also worth noting that traffic from brand terms actually dropped from March to May compared to the previous period.

Relevant Tools and Resources